Friday, August 29, 2008

Monitoring features from ESX 3.5 update 2

At the same time, I have to say this does not fit my environment. You may have experienced a similar situation: patch releases for ESX always seem to require VMware Tools to be upgraded. I still wonder why any minor or major ESX update requires a VMware Tools upgrade. It has cost us a lot of time negotiating downtime to make sure the VMs are patched with the latest version of VMware Tools. And when VMware Tools is not updated, the heartbeat will be inconsistent or not detected.

In that case, you will not be able to enable the sweet monitoring features released recently. I therefore urge VMware to reduce the number of times VMware Tools needs to be upgraded. We can patch ESX with no downtime, but upgrading VMware Tools requires downtime.

This is something VMware has to look at seriously in the software development cycle of the tools they release.

Thursday, August 28, 2008

Linux Systems Being Hit By SSH-Key Attacks

According to US-CERT, the attack appears to rely on stolen SSH keys to gain access to a system. It then uses a local kernel exploit to gain root access, whereupon it installs the "phalanx2" rootkit, derived from the older "phalanx" rootkit.

For more information, please refer to this link

http://www.informationweek.com/news/software/linux/showArticle.jhtml?articleID=210201115

Tuesday, August 26, 2008

Efficient Data Center - Virtualize & Consolidation

High gas/petrol prices have become a global issue. No matter which region or country you are from, this has become an issue that drives up the operational cost of keeping a data center running day to day. In Malaysia, the government recently increased the commercial electricity tariff by 26%. If your DC consumes RM 30,000.00 a month in power, you now have to pay RM 37,800.00 a month. That has a significant impact on the entire IT budget as well as the operational cost. As IT is the main driver for most businesses today, we need to provide a highly efficient data center solution that can recover its ROI within the shortest period possible. This is where virtualization and consolidation come in.
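To make the arithmetic above easy to redo with your own bill, here is a minimal Python sketch. The function name is my own, and the yearly figure simply assumes the bill stays flat for 12 months.

```python
def monthly_cost_after_increase(current_cost_rm: float, increase_pct: float) -> float:
    """Return the new monthly bill after a percentage tariff increase."""
    return round(current_cost_rm * (1 + increase_pct / 100), 2)


# Figures from the post: RM 30,000 a month and a 26% increase.
old_bill = 30000.00
new_bill = monthly_cost_after_increase(old_bill, 26)

# Assumption: consumption stays the same for a full year.
extra_per_year = round((new_bill - old_bill) * 12, 2)

print(f"New monthly bill: RM {new_bill:,.2f}")
print(f"Extra cost per year: RM {extra_per_year:,.2f}")
```

Swap in your own monthly figure and tariff increase to see the yearly impact on your cost center.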

If we compare power consumption across major chip makers such as AMD and Intel, the watts per processor have stayed about the same for at least the last 3 generations. The only real improvement has been performance per watt. With 6-core and 8-core processors coming down the road, I strongly encourage anyone who has not yet adopted virtualization to start virtualizing, or to prepare to virtualize, 80% of their production environment.

My environment currently runs its entire DR solution on VMware technology. In production, I have more than 50 production VMs providing web hosting, middleware, file and print services, etc. Imagine you had a tight budget of only 50k USD: how many physical servers could you buy with that? And what about the amount of power you would have to pay for every month? That is the real cost, as many of you may never have seen the electricity bill. In my case, my cost center has to pay the power bill itself.

Achieving 15:1 consolidation with VMware is no longer a big deal with the high-capacity servers from DELL, HP and IBM. By doing this, you can avoid a multi-million renovation to expand a DC that may be nearly full. Technology is cool, but as human beings we have to work smart. A highly efficient data center sometimes also means a highly productive support team.

Centrino Vpro 2

Most of you may already know the benefits of this product; if not, you can go to www.intel.com to check it out. I would like to share my opinion here about the disadvantages of this product. The biggest concerns are privacy and security.

Let's imagine: today, most of the time we shut down our machines before leaving the office. With Vpro 2, the sysadmin will be able to power up your machine remotely without you being aware of it, and perform patching or any other administration job required. From the sysadmin's point of view, life is of course easier, but what about business users across different organizations who work with sensitive data? Have we really thought about this in detail? Many users store their documents locally on the machine, even though they have a shared drive that is encrypted or protected. This creates a big opportunity for someone to access the machine without the user being notified.

A sysadmin will almost always have administrative rights on most desktops and laptops. Without Vpro, they cannot do this unless the user agrees to the remote access, because you would see the screen moving or see that someone else has logged on.

Again, I would say security is something Intel has to think about to enhance their products. It is a cool solution, but it may not be cool for everyone or every environment.

Monday, August 25, 2008

How Hyper-V quick migration fails

http://malaysiavm.com/blog/how-hyper-v-quick-migration-fails/

Please click on this to have a look

VMware ESX 3i licensing details

SSH on ESXi

1. Go to the ESXi console and press alt+F1

2. Type: unsupported

3. Enter the root password

4. At the prompt type vi /etc/inetd.conf

5. Look for the line that starts with #ssh

6. Remove the #

7. Save /etc/inetd.conf by typing :wq!

8. Restart the management services: /sbin/services.sh restart

Try it!
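If you want to see exactly what steps 5 and 6 do to the file, here is a small Python sketch of the same edit applied to a string. The sample content is a made-up placeholder, not the real ESXi inetd.conf, and the function name is my own. It only shows the text change; on the ESXi console you still edit the file with vi as described above.

```python
def enable_ssh_lines(inetd_conf_text: str) -> str:
    """Remove the leading '#' from every line that starts with '#ssh'."""
    result = []
    for line in inetd_conf_text.splitlines():
        if line.startswith("#ssh"):
            line = line[1:]  # drop only the comment marker
        result.append(line)
    return "\n".join(result) + "\n"


# Hypothetical sample content, for illustration only.
sample = (
    "# example inetd.conf\n"
    "#ssh stream tcp nowait root /path/to/ssh-daemon-placeholder\n"
    "#ftp stream tcp nowait root /path/to/ftp-daemon-placeholder\n"
)

edited = enable_ssh_lines(sample)
print(edited)
```

Only the line beginning with #ssh loses its comment marker; every other commented line is left untouched.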

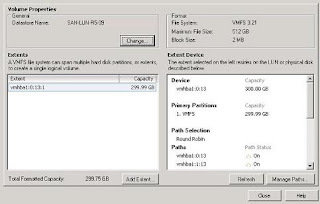

ESX and VM Guest - Round Robin Storage Setting

ESX Hosts

To enable this on an ESX host, browse to the Configuration tab of the ESX host, right-click the datastore and select Properties, then click the Manage Paths option in the GUI wizard. Click the Change button, choose the Round Robin (Experimental) option and click OK. You will need to go through this process one by one to make sure round robin is set from each ESX host to each VMFS datastore.

VM Guests

Just right-click the VM and choose Edit Settings, then select the hard disk that is shown as Mapped Raw LUN on the summary tab. Click Manage Paths followed by the Change button, and in the same way you can easily enable Round Robin.

Saturday, August 23, 2008

Update Manager - VMware Virtual Center for Patching Activities

After a couple of months of patching our ESX hosts and VM guests with Update Manager, here is my review of Update Manager from VMware.

Update Manager has simplified life for system engineers who manage a VM farm with a huge number of VM guests and ESX hosts that may require frequent patching. Before Update Manager was released, most of the time we patched servers using satellite servers, Altiris, SMS and other patching tools. Those come with additional cost when deployed to VM guests or ESX hosts, due to the vendors' licensing agreements.

Update Manager is fully compatible with VMware ESX patch updates for ESX 3.0, 3.5 and ESX 3i. At the host level, all patches are downloaded by the Update Manager scheduled task once VMware officially releases them on their official site. Update Manager also integrates well with Microsoft patches, as well as patches for other well-known software such as Red Hat, Adobe, etc. It even allows us to patch the template images we store for deployment, without having to manually convert the template back to a virtual machine. If you patch a Windows 2003 template image, the entire process is fully automated. This is really impressive. I have also patched my DR servers, which are 30 miles away from my main data center over a 30 Mb MPLS WAN link, and it worked perfectly without any issue at all. Of course, patching takes slightly longer due to the location of the DR servers.

To get Update Manager deployed in your environment, here are a couple of things you may need to configure or enable.

- A dedicated database for Update Manager on SQL Server or Oracle, depending on which database server you use. This database stores all the information and patches used for patching.

- If you have a proxy server in your environment, you need to configure the proxy address and port number in the Virtual Center configuration for Update Manager.

- A scheduled task to refresh and check for the latest patches released on the official site. I recommend running the scheduled task at least once a week. I schedule mine to run weekly, to make sure I get the latest patches when I patch a VM guest or ESX host.

Baseline - a baseline is used by Update Manager to define the patches required for a specific product or platform. The ESX host baselines are built in by default and categorized as Critical and Non-Critical. You also need to create your own baselines for the specific OS and software you are using.

Please make sure you have the Update Manager plug-in installed on your Virtual Infrastructure Client. To attach a baseline to the ESX hosts or VMs you would like to patch, switch the view to Virtual Machines and Templates, select the system you would like to patch, click its Update Manager tab and attach the suitable baseline.

After you attach the baseline, right-click the virtual machine or ESX host and select Scan. A scan does not actually apply any patches. It lets Update Manager compare the current patch level of the ESX hosts and virtual machines against the baseline, and gives a preview of the number of patches that need to be applied to be compliant. After the scan result is displayed, right-click the machine and select Remediate. This will start applying the patches automatically.

For ESX hosts, you need to Vmotion all the VM guests to another ESX host first. This gives zero downtime during maintenance, thanks to VMware's cool Vmotion technology. This has worked for me every time. Once the ESX host is ready, it is recommended to put the host into maintenance mode and then start the remediation. Once patching is complete, the ESX host will show a different patch level or update code, as released by VMware. You can easily verify this against the information on the VMware website.

For VM guest patching, downtime is required as usual, because the operating system needs a reboot. Again, this tool is bundled with Virtual Infrastructure by VMware, and it is really useful for VMware engineers who need to patch their VM guests.

The only disadvantage at the moment is that SUSE Linux is not supported by Update Manager. According to VMware, they will soon release the next version of Update Manager with support for patching SUSE Linux VMs.

Resolution - ESX hosts unexpected disconnect from Virtual Center ( ESX 3.5 update 2 )

When I logged in to my Virtual Center today to check my VM farm, it showed that my ESX host had disconnected from Virtual Center by itself. The ESX host is a critical production host with HA and DRS enabled on the cluster. The first thing I verified was that the ESX host and all my VMs were still in production, and yes, none of the VMs were down; they kept running as normal while the host was disconnected.

Here is what I did to reconfigure my ESX host and rejoin it to the HA and DRS cluster in my production farm.

I disabled the HA and DRS features on the cluster and completely removed the ESX host from the inventory on the Virtual Center server. Following that, I logged in to the ESX host over SSH and became root with su -, then changed to /etc/init.d and ran the command service mgmt-vmware status

It showed that the service was running. Then I issued the command service mgmt-vmware restart. It takes a couple of minutes for the service to restart fully. At the same time, I logged on remotely to one of the VMs sitting on that ESX host to make sure there was no impact on the VM guests. It worked perfectly, without any downtime on the VM guests, and the credit goes to VMware's technology.

Once the service has restarted, you can easily add the host back to Virtual Center and reconfigure HA and DRS on the cluster. The ESX host is now back to normal and working perfectly as usual.

Friday, August 22, 2008

Virtualization - Network, Storage, Data Center

Virtualization has become a MUST for every environment that runs a small, medium or huge data center today. We can even see growth among small and medium businesses, as well as in some manufacturing plants. VMware, as the technology and market leader, has provided a lot of new flexibility in terms of reducing carbon footprint, green IT initiatives, CAPEX savings, etc. The savings are huge from every perspective. At the same time, it has created complexity in the data center, power and cooling, and storage, and of course complexity and extra workload for the engineers who support the environment.

In many of today's case studies, we see that users or implementers did not foresee some important hidden issues during the early stages of deployment: the fast growth of storage, the higher bandwidth required, the specific cooling and power needed for the Virtual Infrastructure zone in the data center, and the operational support tasks that need to be carried out every day.

A successful virtualization architect has to be a specialist in all the areas I mentioned above, to ensure the deployment achieves all the goals set during project planning. We also need to urge employers to send system engineers for further training on multiple products, for example VMware training, Linux administration, Microsoft training, network training and data center training. These are all necessary for an engineer to be able to support, manage and plan the future expansion of the virtualization farm.

To share some examples: a bad network design will slow down the network and the DC, and a bad storage configuration can bring a risk of corruption as well as I/O performance issues. From the data center perspective, power and cooling are a real concern, as the storage and ESX hosts need more power to operate, so proper power planning needs to be in place to secure the production environment.

Complexity = challenge, and it depends very much on the management team to cope with technology change, as well as with the challenge of keeping the engineers interested in their work over time.

Simplifying IT doesn't always mean the best solution, either.

6 cores and 8 cores CPU licensing module for VMware

Thursday, August 21, 2008

Microsoft To Invest Up To $100 Million In Novell Linux

This latest news really shows that Microsoft is becoming desperate to interoperate with the open source world, Linux and Novell NetWare. I am glad to see that a major software giant like Microsoft no longer holds a monopoly, and I believe customers will now have more choice for every system they deploy in the future.

Latest update from Microsoft - Server Virtualization Validation Program

Participating Vendors

The following companies who supply server virtualization software to the marketplace have formally committed to participate in the Server Virtualization Validation Program. Microsoft is working with them to validate their solutions as platforms for Windows Server 2008. Please contact them directly for any additional information.

- Cisco Systems, Inc.

- Citrix Systems, Inc.

- Novell, Inc.

- Sun Microsystems

- Unisys Corp.

- Virtual Iron Software

- VMware, Inc.

System, Storage and I/O compatibility for ESX3i and ESX 3.5

http://www.vmware.com/pdf/vi35_io_guide.pdf

http://www.vmware.com/pdf/vi3_35/

http://www.vmware.com/files/pdf/dmz_virtualization_vmware_infra_wp.pdf

Here are some of the compatibility documents that have been released, for reference. They are really helpful for anyone who plans to implement virtualization on VMware.

Wednesday, August 20, 2008

Microsoft Should Set Windows Licensing for Free

Again, we should cheer and encourage knowledge sharing, as well as the open source world, for today's success. As you may know, parts of Hyper-V actually came from the R&D of the open source communities.

Rumours - VMware will acquire Redhat

A good example is Novell, which is promoting Xen virtualization bundled with their SUSE Linux Enterprise. That covers both the operating system and the hypervisor virtualization technology. Hopefully we will really see this happen, with VMware acquiring Red Hat and having more products on board in the future. The key problem with VMware is still the expensive licensing model, which could always put off potential buyers.

Microsoft Servers on VMware

Microsoft said they will support Windows machines that run on Virtual Server, but they refuse to support VMs that run on VMware. From the consumer's point of view, that is a real show stopper for a lot of potential customers who would otherwise host their production environment on more Windows servers in the future.

Compare Microsoft with Novell SUSE Linux: Novell really does a great job of providing full support to customers who subscribe for support every year, and they will support any virtualization platform on which you host your SUSE Linux.

As Linux keeps growing strongly, this announcement from Microsoft will not drive up their market share in virtualization or server products. It will just create another concern for users when they want to implement a new project, and Microsoft will mostly not be the first choice.

Tuesday, August 19, 2008

SUSE Linux and Microsoft AD integration

Sudo works perfectly as long as the right configuration is in place in the sudo configuration files. I am currently planning to implement this solution on some servers that require interactive user logon to the Linux environment. It will simplify Samba shares and logon authentication, and of course we will no longer need to manage users locally. The biggest advantage is simpler compliance, since authentication goes through Microsoft Active Directory. Single sign-on seems to be workable in this case.

Time Synchronize on VM and ESX

- A recent major bug from VMware caused an expiry issue that prevented VMs from being powered on or Vmotioned. What I did personally was to stop NTP synchronization on the ESX host and set the date back to 5 days before the VM expiry. This easily solved my issue and allowed me to Vmotion all the VMs to another destination host without any interruption. Imagine if you sync all the VMs with the ESX host: you lose this flexibility, and it becomes a big pain to figure out how to simplify the patch process to fix the bug, even after VMware has released the bug fix.

- I still do not see any potential issues with our current configuration, in which all VMs and physical servers sync to the multiple NTP servers we have in our environment. Standardization is always important: if we can minimize the number of different support and maintenance methods, it helps reduce complexity.

- If your VM runs Windows, the VM will synchronize directly with the time servers in AD by default, and I don't really see the need to create another specific way to synchronize time on the VM.

Best Server Model for Virtualization - R900, R905

A couple of important points need to be considered when implementing virtualization:

- Hardware compatibility

- Storage choices

- Networking equipment

- System architecture

- Support and operation maintenance

- CPU model and brand ( AMD or Intel )

DELL launched the R900 and R905 this year specifically to support 4 physical CPU sockets, which can host up to 16 CPU cores with the latest quad-core technology from both Intel and AMD, and which allow memory to scale up to 256GB of physical memory if you go for 8GB modules in the DIMM slots. One interesting thing to look at on these servers is the onboard 10Gbps-capable network ports. The server comes with 4 onboard NICs capable of running next-generation 10Gbps Ethernet. As network bandwidth becomes a big challenge after virtualization, this connection will provide better cable management and of course higher throughput once 10GbE is in place. The additional PCI slots on these 4U servers give you the flexibility to add Fibre Channel HBAs or additional Ethernet cards for redundancy and failover paths for HA.

I would also suggest sticking with 128GB of memory instead of 256GB, as you may hit a CPU bottleneck once you have a higher number of VMs running on the production ESX host. Guess what: a lot of consultants will always encourage us to configure 1 vCPU or 2 vCPUs as a starting point for each VM we create. But in reality, we will have to raise it to 4 vCPUs or 8 vCPUs in the future, due to business growth and increasing data processing over time. If we virtualize the servers and sacrifice performance, that will not be a big selling point for virtualization. You also need to remember that VM storage mostly runs on SAN or ISCSI, which can be a more expensive solution compared to local HDD.

4 physical CPUs and 128GB of memory is the best option at the moment. If you plan for HA or DRS in your environment, then as a safe play to minimize downtime I would not encourage hosting a huge number of VMs on a single host. Consider that if you have 40 VMs on a single host, a hardware failure on that one host will bring down all 40 production VMs.
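To put rough numbers on that risk, here is a small Python sketch. The function names, the 4-host cluster and the limit of 15 VMs per host are my own assumptions for illustration; only the 4 sockets, quad cores and the 40 VM example come from the post.

```python
import math

# 4 sockets x quad-core, as described for the R900/R905 above.
CORES_PER_HOST = 4 * 4


def vms_lost_on_host_failure(total_vms: int, hosts: int) -> int:
    """Worst-case number of VMs that go down when one host fails (even spread)."""
    return math.ceil(total_vms / hosts)


def can_absorb_one_failure(total_vms: int, hosts: int, max_vms_per_host: int) -> bool:
    """True if the surviving hosts can restart every VM after one host fails."""
    return hosts > 1 and total_vms <= (hosts - 1) * max_vms_per_host


print(CORES_PER_HOST)
print(vms_lost_on_host_failure(40, 1))   # everything on one host
print(vms_lost_on_host_failure(40, 4))   # assumed 4-host cluster
print(can_absorb_one_failure(40, 4, 15)) # assumed limit of 15 VMs per host
```

Spreading the same 40 VMs over more hosts shrinks the number of VMs hit by a single hardware failure and leaves room for HA to restart them elsewhere.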

For warranty and services, DELL has always committed to better service for my company at more reasonable and affordable pricing. You may enjoy the same benefits from HP, but you will have to pay more in that case. As for IBM, I would say x86 servers are just not their focus, as they are always slow to release bug fixes for firmware or drivers on the x86 platform.

Monday, August 18, 2008

High Availability (HA) and DRS in ESX

I had an incident where HA kept disconnecting and reconnecting automatically every couple of minutes. The workaround is to make sure the hostnames you configure for your ESX servers are consistently all lower case or all upper case. If you have a mix of upper case and lower case in the physical ESX cluster, you will experience this issue because HA communication is tied to the DNS name. In Linux, case sensitivity always applies.
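A quick way to catch this before it bites is to check the hostname list for mixed case. Here is a minimal Python sketch; it assumes you standardize on lower case, and the hostnames below are made up for illustration.

```python
def hostnames_not_lowercase(hostnames):
    """Return the hostnames that contain upper-case characters."""
    return [name for name in hostnames if name != name.lower()]


# Hypothetical cluster members, for illustration only.
cluster_hosts = [
    "esx01.example.com",
    "ESX02.example.com",
    "esx03.example.com",
]

offenders = hostnames_not_lowercase(cluster_hosts)
print(offenders)
```

Any name the check returns should be renamed, or its DNS entry corrected, so that every host in the cluster uses the same case.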

For DRS, it is not recommended to configure the aggressive level, because Vmotion generates overhead and network traffic on the VMkernel port of both the source host and the destination host. In one of the tests I did previously, I Vmotioned 20 VMs to another host at the same time and hit a CPU bottleneck that stopped the Vmotion activity. My advice is to Vmotion one by one if you are not in a rush, as it generates less overhead and of course has a higher success rate.

VMware Lab Manager VS Virtuozzo

Virtuozzo presents itself as Virtual Private Servers that share the same operating system and OS library files, with template-based technology able to deploy a VM in a minute or less. Obviously, Lab Manager is not really a new technology in the market for this, as I have had experience with Virtuozzo doing this for the past 2 years. Of course, VMware did the best marketing job in reaching customers worldwide.

Lab Manager provides version control and multiple sets of servers, with fence technology (NAT) and IP mapping to prevent hostname and IP conflicts if you need to connect your development and test environment to the production network.

IP mapping is pretty cool. For example, you may have 2 different VMs with the same IP, 10.1.1.2, in Lab Manager, each in a different version of a set of VMs linked from the original image. They will not conflict with each other when you enable the connection to the production network. That is thanks to the fence, which connects each set to a separate virtual NAT network and maps it to a different production IP to reach the production network. To do this, it is highly recommended to reserve a pool of IPs for Lab Manager to assign automatically when needed.
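To picture how two VMs with the same internal IP end up with different production addresses, here is a small Python sketch of the idea. It is not how Lab Manager is implemented; the class, the configuration names and the pool addresses are all my own assumptions.

```python
from ipaddress import ip_address


class FencePool:
    """Hands out production IPs from a reserved pool, one per fenced VM."""

    def __init__(self, first_ip: str, size: int):
        start = ip_address(first_ip)
        self._free = [start + offset for offset in range(size)]
        self._mapping = {}

    def external_ip(self, configuration: str, internal_ip: str) -> str:
        """Return the production IP mapped to this VM, assigning one if needed."""
        key = (configuration, internal_ip)
        if key not in self._mapping:
            if not self._free:
                raise RuntimeError("reserved IP pool is exhausted")
            self._mapping[key] = self._free.pop(0)
        return str(self._mapping[key])


# Hypothetical reserved pool and configuration names.
pool = FencePool("192.168.50.10", 4)
first = pool.external_ip("web-stack-v1", "10.1.1.2")
second = pool.external_ip("web-stack-v2", "10.1.1.2")
print(first, second)
```

Both VMs keep 10.1.1.2 inside their own fenced network, while the production network only ever sees the two different pool addresses.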

Virtuozzo can be categorized as a different approach to VM technology, which some people call VPS, virtual private servers. The servers share the same OS base, library files, etc. Central server management can be done on the management server, and it gives you the flexibility to manage the VPS online most of the time. Virtuozzo leans more towards template-based technology, as you need to have a template ready when you deploy a specific middleware or application.

Lab Manager is suitable for large-scale development and operations support environments. Vendors will find the technology built on top of Lab Manager very helpful. The cost of the licensing scheme seems to be the show stopper for SMEs or medium enterprises that are not focused on IT as a business. The current licensing scheme requires it to be built on top of ESX Enterprise edition, with additional per-CPU-socket licenses for Lab Manager. Again, it is cool technology, provided it is suitable and cost effective for you.

ESX 3.5 VS ESX3i

A couple of important points when we compare ESX 3.5 to 3i:

- Do we have redundancy or RAID 1 for the flash memory card built in to your server? You can also install the installable version on a RAID 1 disk, but that wastes the hard disks you bought.

- How confident is VMware in guaranteeing zero bugs on ESX 3i? (I guess that is not possible.)

- 3i boots purely from flash or ROM. Very little can be done from a management perspective, even when you are standing in front of the server. Today, ESX 3.5 can still be troubleshot with Linux commands, and we can tweak around the bugs we have. With 3i, it seems there is not much we can do besides waiting for a patch or flash release from VMware.

VMware over ISCSI storage - Equal Logic

Recently there has been strong growth and a strong push for second-tier ISCSI storage from SAN storage companies such as NetApp, EMC, DELL, HP, IBM, etc. A lot of white papers and marketing brochures have been published and distributed worldwide, claiming that VMs perform better on ISCSI than on Fibre Channel SAN.

I would like to share a couple of findings from a real proof of concept and test that I personally set up and ran. VMware supports both hardware-based ISCSI HBAs and software-based ISCSI through its VMkernel. There are a couple of things you may want to consider before deciding to go further with ISCSI. Over the last decade, networking has become more and more important to operating most of the important pieces of a production data center. Networking has also become the major issue most of the time, especially in VM environments, which may need more bandwidth to support the multiple VMs consolidated onto a single physical host.

I was invited by the Equal Logic vendor to run a real test with the demo unit in my data center. Below are the major findings I want to share.

Test Equipment

Server - DELL PE 2950 8GB and 2 x Quad Core 2.0 Ghz

Storage Switches - DELL Gigabit Switches

Storages - Equal Logic PS 5000 with SAS HDD

Operating System - SUSE Linux, Windows 2003, ESX 3.5

Impressive

- High processing speed thanks to the processor built in to each storage bay. It can scale up to 12 storage bays in a cluster. Each enclosure contains 16 physical spindle drives with SAS technology.

- Simplified management - the entire process of configuring the storage until it is usable takes less than 15 minutes. It is all web based and runs on open source browsers such as Firefox.

- Maximum throughput with the software ISCSI initiator, tested on servers and on my personal laptop. It was able to use up to 97% of the gigabit throughput on both my laptop's gigabit connection and the server's gigabit connection.

- High redundancy with global hotspare configuration recommended

- Impressive load balancing feature that scales in both storage and performance. An additional enclosure provides additional spindle power and processing power from the storage bay. Compare this with the current Clariion series, which always gives slow performance even when the storage is only 60% populated.

- Shorter commissioning process and a reduced provisioning timeline. It is almost plug and play.

- RAID 50 available as an option to be configure during the provision process
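To put the 97% figure from the list above into more familiar units, here is a quick Python sketch. It is a rough conversion only: it uses decimal units and ignores Ethernet, TCP/IP and ISCSI protocol overhead, and the function name is my own.

```python
def link_throughput_mb_per_s(link_gbps: float, utilization: float) -> float:
    """Convert a link speed in Gbit/s and a utilization ratio to MB/s."""
    megabits_per_second = link_gbps * 1000
    return round(megabits_per_second / 8 * utilization, 2)


# 97% of a single gigabit connection, as observed in the test.
print(link_throughput_mb_per_s(1, 0.97))
```

That comes to roughly 121 MB/s per gigabit connection, which is the ceiling each single link imposes no matter how fast the storage behind it is.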

Disadvantages

- Now is the time to think about long-term data center strategy, before it becomes another mess like the old days when we managed Fibre Channel without director switches with fabric ports. To get the performance and scalability, you have to remember that every network connection, uplink and downlink, directly affects the throughput of each storage box. In the Fibre Channel world, if you run cascaded 4Gb FC switches, you have at least 8Gb of redundant, load-balanced throughput from switch to switch. With Ethernet, we may have to look at 10Gbps uplinks between switches. In conclusion, the switch uplink directly affects the entire storage throughput to the client.

- As you start to manage the ISCSI network as a distributed network, or separately from the normal Ethernet in the DC, this can generate extra workload from a management perspective as well as operational support overhead.

- ISCSI over 10GbE is on the roadmap but not yet released, and the current gigabit ISCSI price seems a little too high to be comparable with Fibre Channel SAN.

- Software ISCSI has a higher overhead on server resources compared to FC HBA storage.
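The uplink point in the list above can be sketched in a few lines of Python. The idea is simply that clients on the far side of a switch can never get more than the smaller of the storage ports' total bandwidth and the uplink's total bandwidth. The port counts below are made-up examples, and the model ignores protocol overhead and traffic patterns.

```python
def effective_throughput_gbps(storage_ports_gbps, uplinks_gbps):
    """Upper bound on throughput across a switch uplink, in Gbit/s."""
    return min(sum(storage_ports_gbps), sum(uplinks_gbps))


# Hypothetical: six 1Gb storage ports behind two 1Gb uplinks.
print(effective_throughput_gbps([1] * 6, [1, 1]))

# Same storage ports behind a single 10Gb uplink.
print(effective_throughput_gbps([1] * 6, [10]))

# Two cascaded 4Gb FC links, the 8Gb case mentioned above (storage side assumed larger).
print(effective_throughput_gbps([4] * 4, [4, 4]))
```

With gigabit uplinks the switch-to-switch link becomes the bottleneck long before the storage does, which is why 10Gbps uplinks matter so much here.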

Summary

My opinion is to wait for the 10GbE ISCSI release before really deciding whether ISCSI should replace the main FC SAN storage for high availability purposes. For now, it may be suitable for disaster recovery solutions, development VM farms and unmanaged locations, for example factories or branch offices that do not have a complete data center solution. This applies specifically to ISCSI solutions with VMware.

VMware VMFS vs RDM (Raw Device Mapping)

Recently I read a couple of articles with performance comparison charts from VMware, NetApp and some of the forum communities, and I found that real performance is quite different from the technical white papers I had read before.

Today, more users are actually deploying mission-critical and high-I/O servers in virtualized environments, but we always see some I/O bottlenecks caused by storage performance. VMDK provides flexibility from a management perspective, but it sacrifices the performance you may need for databases, file transfers and disk operations. I ran a couple of tests with real-world scenarios instead of the widely used Iometer, and here is a summary of the results I would like to share.

For disk performance, we always split I/O into 2 categories: sequential and random. In sequential mode, you will see a huge difference when you transfer files locally or over the network. My test environment runs on Fibre Channel SAN storage, with the same LUN size and RAID group created at the storage level. The only difference is VMFS vs raw.

Raid Group design 7+1 raid 5 configuration and run on MetaLun configuration

Each LUN size is 300GB

Performance monitoring tool = Virtual Center Performance Chart

VM Test Machine = 4 Vcpu, 8GB Memory

Operating System = SLES 10 x32, x64 ; Windows Server 2003 x32, x64

Sequential : RDM outperforms VMFS, achieving more than 2 times the throughput during local file transfers on the VM

Random I/O : Raw Device Mapping still outperforms VMFS, with throughput similar to the sequential file transfer. Multiple sessions with random database queries were executed in this test

For NFS file transfers from VMFS to VMFS, I saw the bottleneck appear much earlier than with RDM.